I’ve been making some improvements to the blog. In the previous post, I talked about how I was using ChatGPT to generate tags, summaries and lists of related articles. If that’s working correctly, the previous post should appear in the list of related articles below. If it isn’t, here’s a direct link: Improving My Blog Using AI.

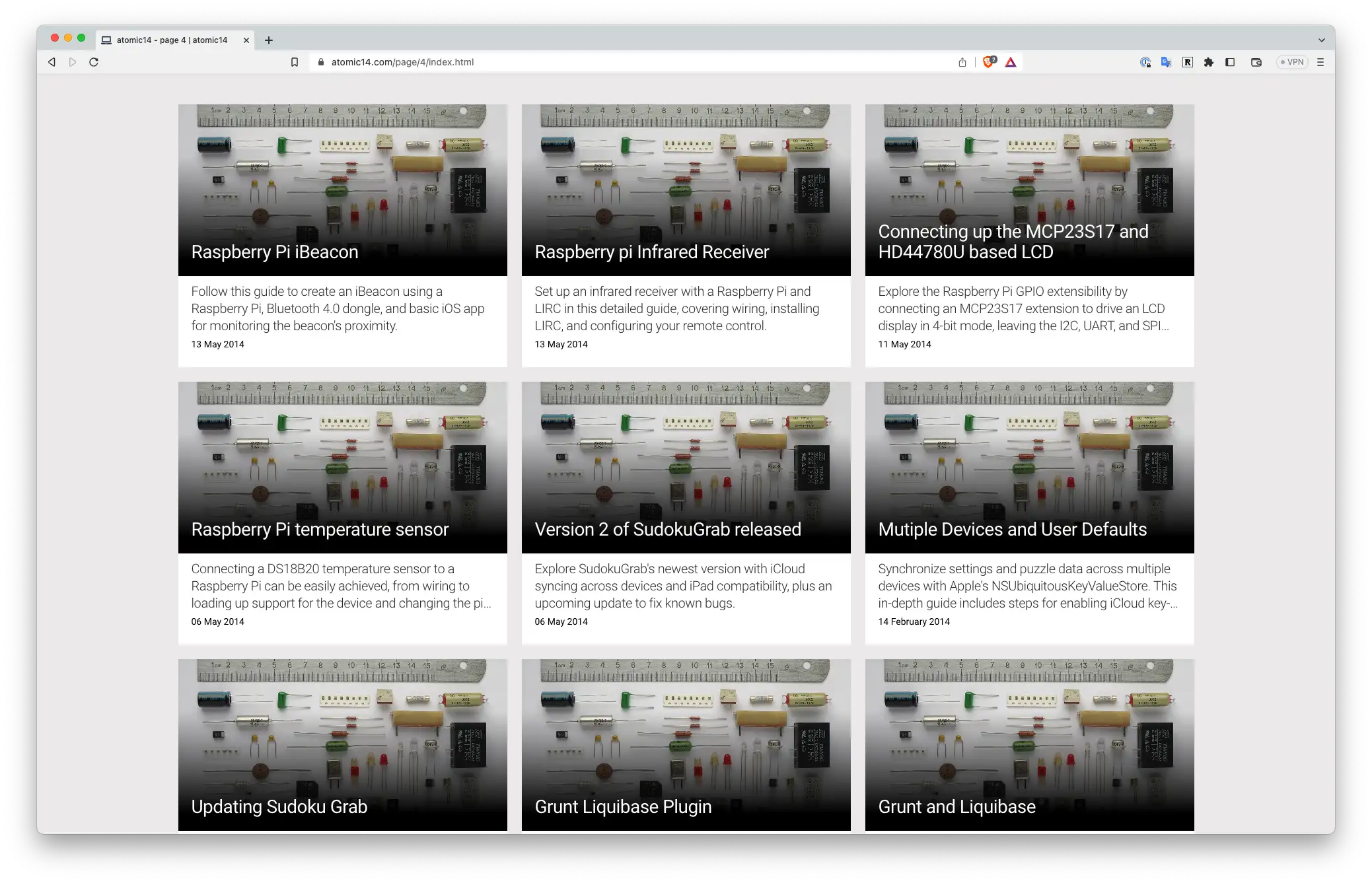

We finished the post with my complaint that I was missing a lot of header images on some of the older posts, making the front page look a bit boring:

I’ve always struggled with getting ChatGPT to generate good image prompts. It always seems to stray into a very instructional-type reply, as if it’s briefing a designer, which doesn’t seem to produce the best results. However, things seem to have improved a lot recently, so I thought I’d give it another go.

Here’s a suggestion from ChatGPT4 for the Home Automation with ChatGPT post:

Give me an image prompt for DALL-E 2 that would be a faithful representation of the article below.

---

CONTENTS

---

Produces this:

An animated Raspberry Pi is controlling a variety of household lights, including in the kitchen, bedroom, dining table, bathroom, and lounge. Each light is symbolized by a unique icon reflecting its location. Chat bubbles emerge from the Raspberry Pi, containing phrases like 'Let there be light,' and 'Time for breakfast.' As the phrases are spoken, the respective lights glow brighter. On a screen behind the scene, snippets of Python code and an OpenAPI yaml file are visible, symbolizing the ChatGPT-generated code that's controlling this smart home automation.

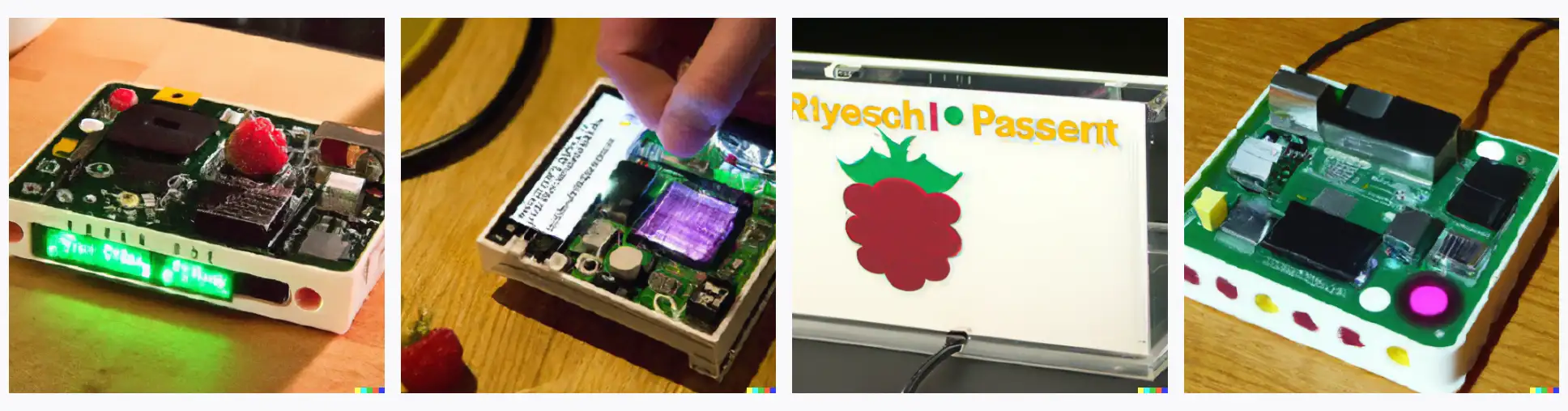

It’s a bit long and a bit too detailed, but we can give it a go. This is what DALL-E 2 produced:

It’s not too bad. We have some things that look vaguely Raspberry Pi-like, and there’s even a raspberry in one of the images. But when we compare it with what Midjourney can produce, it looks a bit rubbish:

Midjourney has produced a much more aesthetically pleasing image (at least to my eye). We’re not really trying for a perfect representation of the article, we’re just trying to make the front page look a bit more interesting. The other nice thing with Midjourney is that we can specify the aspect ratio of the image we want. With DALL-E 2 we’re stuck with a square image. There are various ways of getting around this limitation by shrinking the image, then inpainting and finally cropping, but it’s a pain.
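To give a feel for why the shrink, inpaint and crop route is a pain, here is a minimal sketch of just the geometry. It assumes one particular reading of the workaround: the square image is shrunk and pasted into the centre of a blank square canvas, the empty border is inpainted, and the result is cropped to a landscape strip. The function name `widen_plan` and the returned keys are made up for illustration, and the inpainting step itself is not shown.

```python
def widen_plan(side, ratio_w, ratio_h):
    """Work out sizes for turning a square image into a landscape one.

    side    -- width/height of the square canvas the generator produces
    ratio_w -- target aspect ratio width  (e.g. 16)
    ratio_h -- target aspect ratio height (e.g. 9)
    """
    if ratio_w < ratio_h:
        raise ValueError("this sketch only handles landscape targets")

    # The final crop keeps the full canvas width, so its height is fixed
    # by the target aspect ratio.
    crop_height = round(side * ratio_h / ratio_w)

    # Shrink the original square so it exactly fills the crop's height,
    # then centre it on the canvas. Everything else needs inpainting.
    offset = (side - crop_height) // 2

    return {
        "shrunk_size": (crop_height, crop_height),
        "paste_at": (offset, offset),
        # (left, upper, right, lower) box to crop after inpainting
        "crop_box": (0, offset, side, offset + crop_height),
    }


plan = widen_plan(1024, 16, 9)
print(plan)
```

Even in this simplified form there are three separate image operations to get right for every picture, compared with asking Midjourney for the aspect ratio directly.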

So, I’d quite like to use Midjourney. As always though, there is a catch.

There’s no API for Midjourney; access is only available via Discord. And the terms of service are pretty strict around automation and scripting:

So, we’re definitely not allowed to automate anything!

But, it’s fun to think about how you would automate it - not that I would ever contemplate doing this, nor would I encourage or suggest anyone else to do so either!

There are a couple of options that spring immediately to mind:

- Using GUI automation tools to drive the Discord desktop client

- Using WebDriver to drive the Discord web client

There’s a popular library called PyAutoGUI that looks like it would work for driving the desktop client.

However, looking at the docs, it feels like it would be very fragile: you are moving the mouse to specific X and Y coordinates, then clicking on things. You’d have to be very careful to arrange the windows in exactly the right place and then not touch the computer. So it’s not ideal.

WebDriver feels like the easiest approach, and if you do a bit of googling you’ll find some suitable code that can be hacked together - not that I’d ever do anything like that of course!

Assuming you’ve managed to get Midjourney to generate all the images you need (either by copying and pasting or by more nefarious means), you now have another problem. How do you download all your images?

The easiest way to do this is just to go to the Midjourney website and you can see all your images.

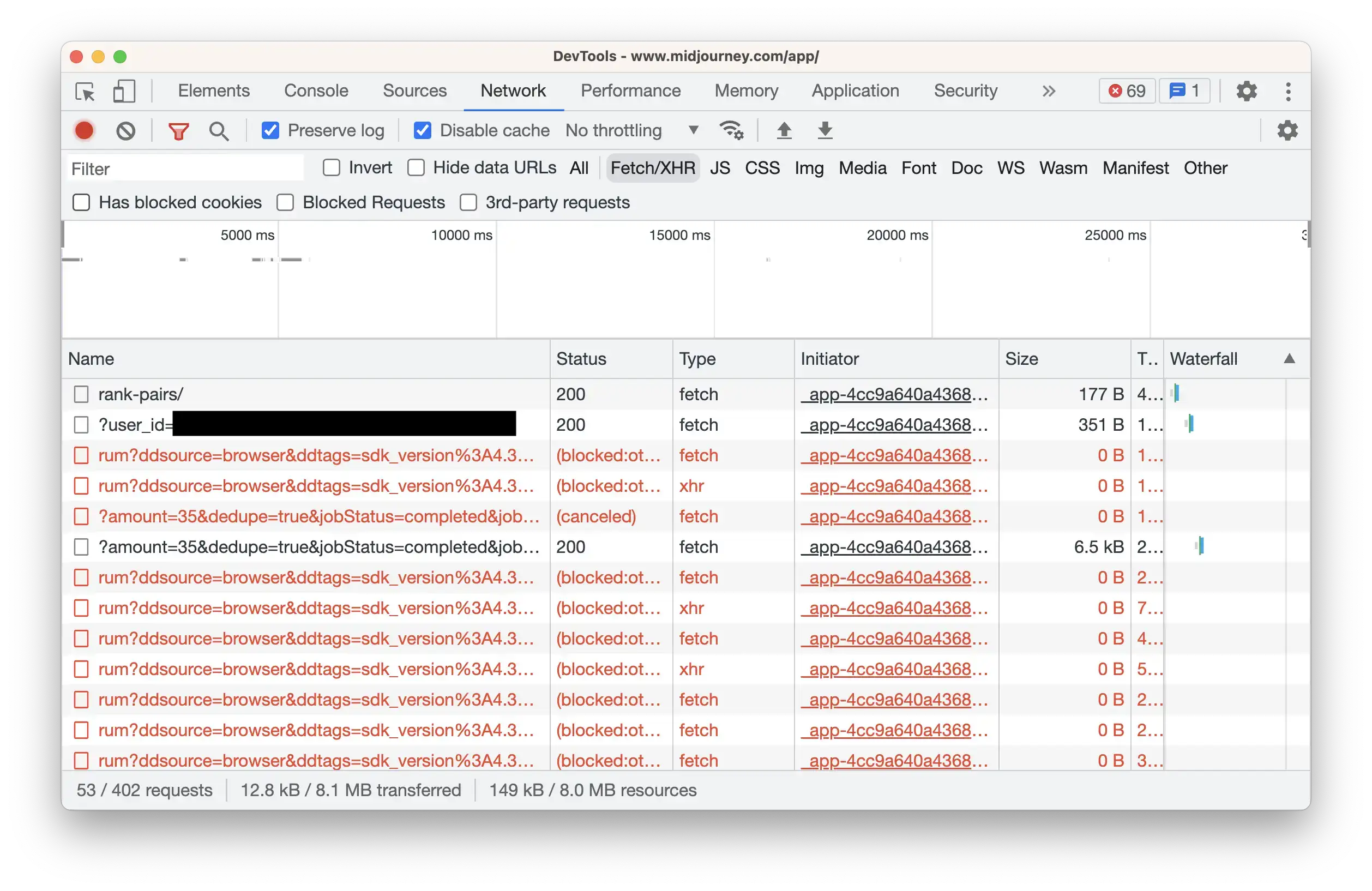

Whenever I’m faced with a web scraping challenge, my first stop is to open up the dev tools and see if there are any “Fetch/XHR” requests going on.

Amongst all the tracking and analytics requests that are being blocked by the browser we can see a pretty interesting one:

https://www.midjourney.com/api/app/recent-jobs/?amount=35&dedupe=true&jobStatus=completed&jobType=grid&orderBy=new&prompt=undefined&refreshApi=0&searchType=advanced&service=null&type=all&userId=80585519-76f3-4e8b-b2ab-3eedda0c9d61&user_id_ranked_score=null&_ql=todo&_qurl=https%3A%2F%2Fwww.midjourney.com%2Fapp%2F

And if we scroll down the images to the “load more” button and click on it, we see another request, but this time we’ve got a couple of extra parameters, including page:

https://www.midjourney.com/api/app/recent-jobs/?amount=35&dedupe=true&jobStatus=completed&jobType=grid&orderBy=new&page=3&prompt=undefined&refreshApi=0&searchType=advanced&service=null&toDate=2023-05-27+13%3A01%3A44.226263&type=all&userId=80585519-76f3-4e8b-b2ab-3eedda0c9d61&user_id_ranked_score=null&_ql=todo&_qurl=https%3A%2F%2Fwww.midjourney.com%2Fapp%2F
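The two URLs are long enough that it’s hard to spot the difference by eye. A small sketch using only Python’s standard library can pull the query strings apart and show which parameters were added. The helper names `query_params` and `extra_params` are just illustrative.

```python
from urllib.parse import parse_qs, urlsplit

FIRST = (
    "https://www.midjourney.com/api/app/recent-jobs/?amount=35&dedupe=true"
    "&jobStatus=completed&jobType=grid&orderBy=new&prompt=undefined"
    "&refreshApi=0&searchType=advanced&service=null&type=all"
    "&userId=80585519-76f3-4e8b-b2ab-3eedda0c9d61&user_id_ranked_score=null"
    "&_ql=todo&_qurl=https%3A%2F%2Fwww.midjourney.com%2Fapp%2F"
)
SECOND = (
    "https://www.midjourney.com/api/app/recent-jobs/?amount=35&dedupe=true"
    "&jobStatus=completed&jobType=grid&orderBy=new&page=3&prompt=undefined"
    "&refreshApi=0&searchType=advanced&service=null"
    "&toDate=2023-05-27+13%3A01%3A44.226263&type=all"
    "&userId=80585519-76f3-4e8b-b2ab-3eedda0c9d61&user_id_ranked_score=null"
    "&_ql=todo&_qurl=https%3A%2F%2Fwww.midjourney.com%2Fapp%2F"
)


def query_params(url):
    """Return the query string of a URL as a flat {name: value} dict."""
    parsed = parse_qs(urlsplit(url).query)
    # parse_qs returns a list per key; each key appears once here.
    return {name: values[0] for name, values in parsed.items()}


def extra_params(before, after):
    """Parameters present in `after` but missing from `before`."""
    old = query_params(before)
    return {k: v for k, v in query_params(after).items() if k not in old}


print(extra_params(FIRST, SECOND))
```

Running this shows that the second request differs only by its `page` and `toDate` values, so everything else in the query string stays the same between pages.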

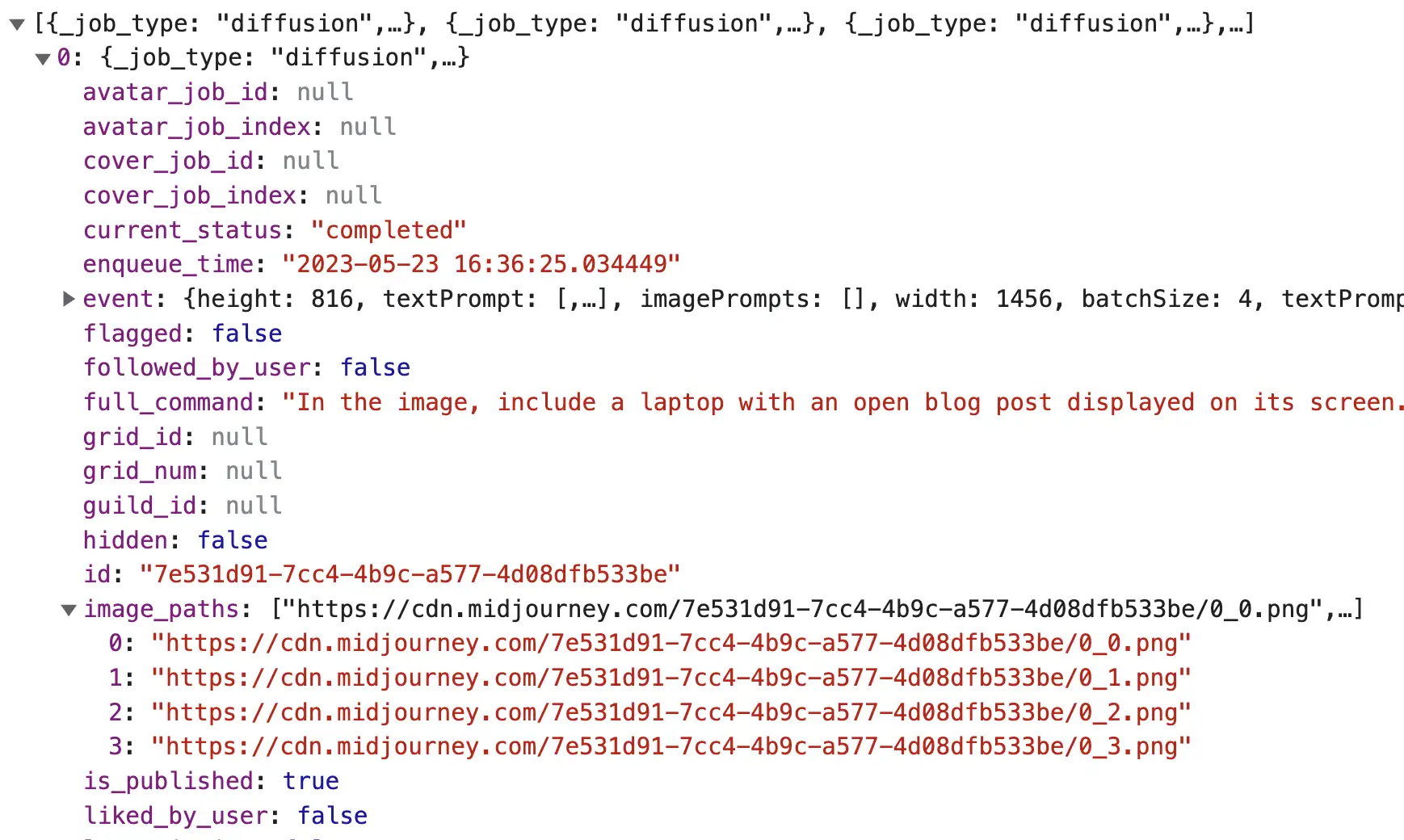

The response to both these requests looks very useful. We get a list of results along with links to each of the 4 images that make up the grid - perfect!

At this point, we could try and get clever and write a script to drive the API and go through each page. But really we don’t need to bother: we’re only interested in the latest couple of pages, so we can just copy and paste the response into a file. Sometimes it really is just quicker not to write a script!

With the JSON response in a file, we can run through each result, match up the original caption with the generated image and download the four images.
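Roughly, that step could look like the sketch below. The shape of the response is an assumption: it treats the saved file as a JSON list of jobs, each with a `prompt` string and an `image_paths` list of four URLs. Those field names are hypothetical, as are the helper names, so adjust them to whatever the real response contains. Files are named after the prompt so each image can be matched back to the caption that produced it.

```python
import json
import re
from pathlib import Path
from urllib.request import urlretrieve


def slugify(text, max_len=60):
    """Turn a prompt into a short, filesystem-friendly name."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return slug[:max_len].rstrip("-")


def plan_downloads(jobs, out_dir="images"):
    """Return (url, destination) pairs for every image in every job.

    ASSUMPTION: each job is a dict with hypothetical keys
    "prompt" (str) and "image_paths" (list of URLs).
    """
    plan = []
    for job in jobs:
        stem = slugify(job["prompt"])
        for index, url in enumerate(job["image_paths"]):
            plan.append((url, Path(out_dir) / f"{stem}-{index}.png"))
    return plan


def download_all(json_file, out_dir="images"):
    """Read the saved response and fetch every image it lists."""
    jobs = json.loads(Path(json_file).read_text(encoding="utf-8"))
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for url, destination in plan_downloads(jobs, out_dir):
        urlretrieve(url, str(destination))
```

Splitting the planning from the downloading makes it easy to print the plan and check the file names before fetching anything.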

Our next challenge is: Which of the four images do we want to actually use?

I was thinking about getting a description for each image and then going back to ChatGPT to ask which image most closely matched the article, but it was quite hard to find something I could use for this.

So I asked ChatGPT for some suggestions:

What are some simple statistics that can be extracted from an image to determine how "interesting" it is?

And it came back with some interesting suggestions ranging from fairly simple to quite complex:

Determining what makes an image "interesting" can be quite subjective and often depends on the context and viewer. However, there are indeed some quantifiable characteristics that researchers have associated with the perceived interest or attractiveness of an image:

1. **Color diversity**: Images with a greater variety of colours can be more visually interesting. This can be measured as the number of unique colours or by using entropy in the colour space.

2. **Texture complexity**: Similarly, an image with more complex texture patterns could be more interesting. Texture can be measured by analyzing the spatial distribution of colours or brightness in an image.

3. **Contrast**: High-contrast images can be more visually striking. Contrast can be measured statistically as the standard deviation of the brightness (i.e., grayscale intensity) of the pixels.

4. **Spatial Frequency**: Studies have shown that people tend to find images with more medium-frequency content to be more pleasing. You can extract this via a Fourier transform.

5. **Symmetry**: Images with some degree of symmetry can be appealing, especially bilateral symmetry. Various measures of symmetry can be derived depending on the exact definition used.

6. **Presence of Faces**: Humans are naturally attracted to faces. The number and size of faces detected can be an interesting measure. This requires a face detection algorithm.

7. **Object Recognition**: Recognizable objects or features could make an image more interesting. This requires more sophisticated techniques like object detection or semantic segmentation.

8. **Depth of Field**: Images with a shallow depth of field (where the subject is in focus and the background is blurred) are often seen as more aesthetically pleasing. This requires techniques like edge detection and depth estimation.

9. **Rule of Thirds**: This is a guideline which proposes that an image should be imagined as divided into nine equal parts by two equally spaced horizontal lines and two equally spaced vertical lines, and that important compositional elements should be placed along these lines or their intersections. There are ways to determine how well an image follows this rule.

10. **Saliency**: Saliency models predict which areas of an image are likely to attract attention. These models are often based on the idea that humans are more likely to focus on areas of high contrast or unique features.

Remember, these are just guidelines and not hard-and-fast rules. They might be a starting point to build an algorithm to evaluate the "interestingness" of an image, but personal and cultural differences, as well as the context in which the image is viewed, will also greatly influence this judgement.

I tried out the first few of these as they were pretty easy to implement. In the end, I just plumped for contrast as it seemed to work OK. The problem with Midjourney is that generally, all the images look really nice, so it is kind of arbitrary which one you pick.
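As a minimal sketch of the contrast measure (the standard deviation of grayscale brightness, as described in the list above), here is a version that uses only the standard library. It assumes each image has already been loaded as a list of `(r, g, b)` tuples, for example with an imaging library such as Pillow. The luma weights are the common Rec. 601 ones, which is a choice made for this example rather than something the original script is known to use, and the function names are illustrative.

```python
from statistics import pstdev


def brightness(pixel):
    """Grayscale intensity of an (r, g, b) pixel using Rec. 601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def contrast(pixels):
    """Contrast as the standard deviation of pixel brightness."""
    return pstdev([brightness(p) for p in pixels])


def pick_most_contrasty(images):
    """Given {name: pixels}, return the name with the highest contrast."""
    return max(images, key=lambda name: contrast(images[name]))


# Tiny made-up "images": a flat grey square and a black/white checkerboard.
candidates = {
    "flat": [(128, 128, 128)] * 4,
    "checker": [(0, 0, 0), (255, 255, 255)] * 2,
}
print(pick_most_contrasty(candidates))
```

With the four images from a Midjourney grid as the candidates, the same `pick_most_contrasty` call would select one of them automatically.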

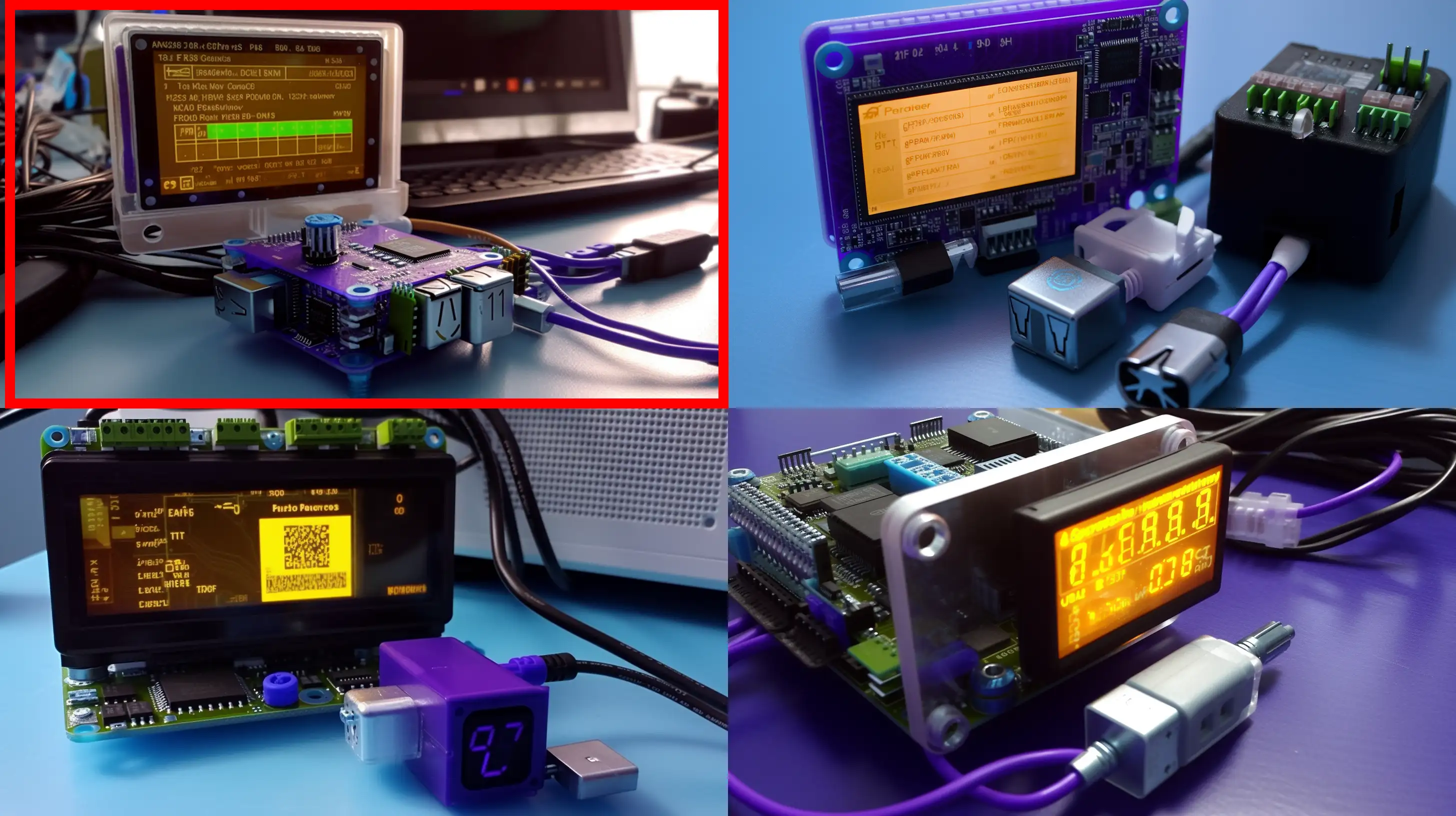

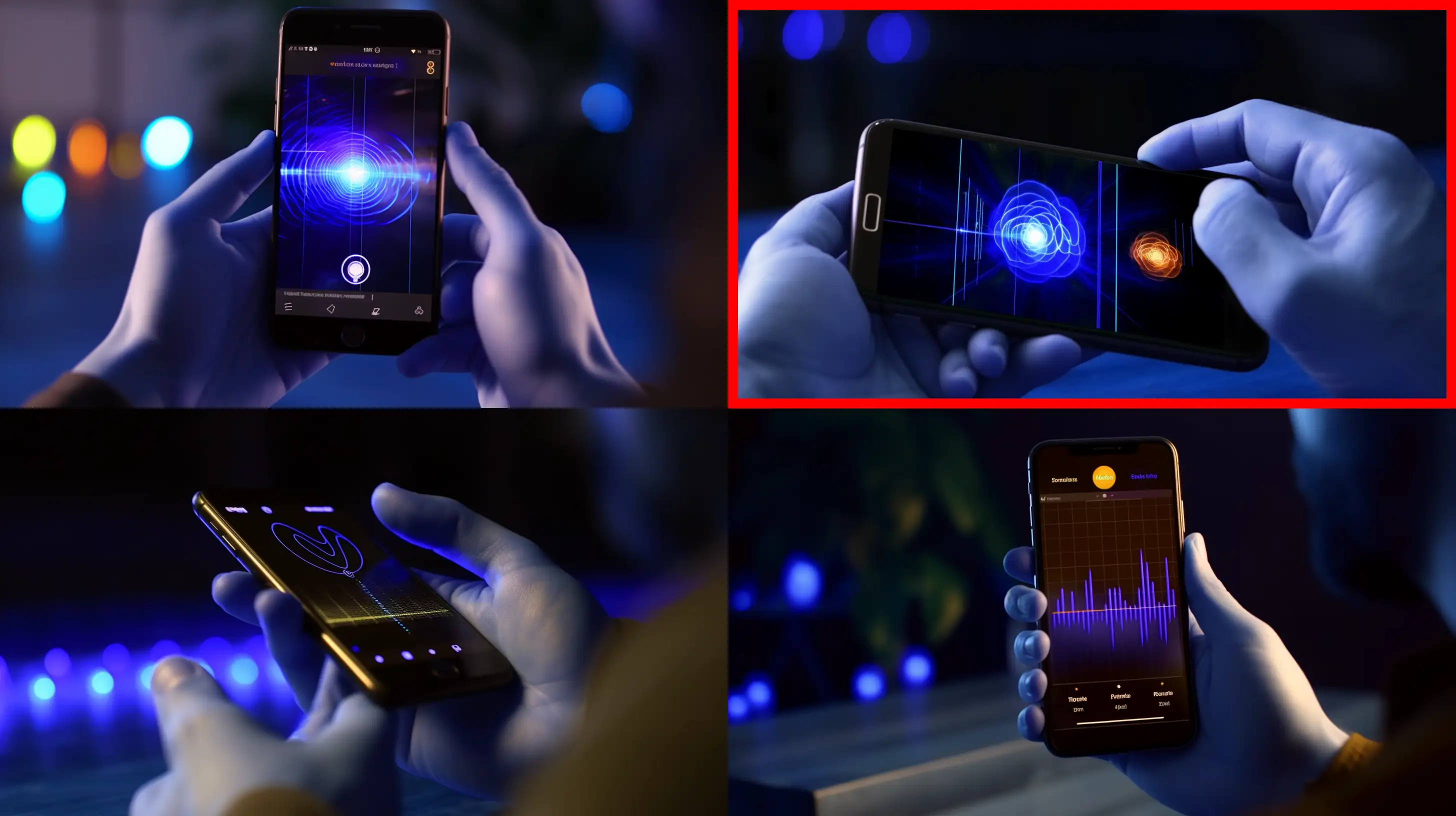

Here are a couple of examples with the best image highlighted:

I would definitely like to revisit this at some point though as it’s pretty interesting.

All the articles have been updated to their new images. Here’s what the old pages used to look like:

And here’s what it looks like now!

I think it’s pretty nice! You could argue that most of these old posts are kind of irrelevant now and I could have just left them. But I’ve learned a lot from this fun bit of scripting and the blog looks much nicer. So I’m happy!